I'm fortunate enough to work at a lovely company; one that has agreed to me working part-time. I now have a day a week, for 6 months, to do with as I wish. That's roughly 24 days. I have 24 days to write my own 3D game engine!

The world doesn't need any more game engines, but that's not really the point. I've been interested in computer graphics for a very long time, and visual art for even longer! I've always wanted to make my own engine, really just to see if I can. It's one of those 'bucket-list' items for me. I've had many false starts - several abandoned projects written in C++, OpenGL and whatnot. The closest I got was a library called pxl.js, which I wrote in CoffeeScript, a language that compiles to JavaScript. I used it in a couple of professional gigs, most notably the Equatorie Project I did a while back.

The problem I had with WebGL, though, is that it's quite far removed from the hardware. It didn't feel like real computer graphics somehow. That sounds a bit sniffy and elitist perhaps, but I think there's a ring of truth to it when it comes to the technical side. There's simply much more you can do with a GPU when you remove some of the layers - more to learn and more to gain, if that's what you are after. And I reckon I am. I'd go back to WebGL one day, possibly using TypeScript instead, but that's for another time. Besides, there are quite a few WebGL frameworks out there. I was never a big fan of three.js - indeed, it was my experience with three.js that led me to make pxl.js - but I must admit, it's an impressive achievement. Writing a WebGL engine was a bit of a warm-up, I think. I don't doubt that I'll use bits from pxl.js in the overall design.

What I'm aiming for is an engine I can use to make a simple game, or to use as a base for further creative endeavours. A simple tool I can play around with, and maybe get something published? Probably something along the lines of Cinder, openFrameworks or Island. I'm also going to do this in the open - publishing to GitHub as I go, and writing up my progress here.

I should define clearly what the engine needs to do and what it will include, and perhaps more crucially, what it will not. These requirements derive from what I want to do with the engine. In the past, I've made the mistake of just hacking away on something generic, with no end goal in mind. I think this time, I'll do things differently and start thinking about what this tool is for.

The final engine should be able to do the following:

I'd like to build a basic game with this. I'm thinking something along the lines of a Dungeon Crawler, or maybe a text adventure with 2D and 3D elements, a bit like Citizen Sleeper but a tad simpler. I think this should be possible with some basic features. We might need some animation too, but basic stuff.

I do enjoy making Demos, as part of the Demoscene. It would be nice if I could have a playground where I could just test out some basic ideas. One example would be to load a screen sized quad and do some Raymarching. This would mean that swapping out shaders on the fly would be essential.

It would be nice to load up some cool models like the Sponza Atrium or maybe some of my own 3D scans, and then wander around them. That means some support for loading various 3D model formats.

Generally, I want to be able to muck about with graphics and have the tools to just tinker. That's what I'm after here, I think.

I'm keen to look towards the future and Gaussian splatting seems cool! I've done something similar in my PhD work. In a nutshell Gaussian Splats (and related NeRF) are all about using points (or in this case, blurry points) to create a 3D scene, instead of triangles. There are a number of advantages and disadvantages to this approach but it ties in nicely to A.I. You can generate realistic 3D scenes from a small number of photographs. Programs like Scaniverse allow you to create such scenes with your phone. I think having some support for this would be essential for any future looking engine.

In my PhD work I came up with a very, very basic differentiable renderer. The idea that an AI could learn to generate 3D scenes is really interesting. It seems that Differentiable Rendering has taken off, with a number of approaches out there. This is something that I'd like to aim for, but it might be quite difficult and so I'm putting this down as more of a next step, if I get the chance.

I've seen this sort of thing called Inverse rendering, though that's perhaps a slightly different thing. I need to investigate more but it's definitely on my horizon.

Now that we know what we want from our engine, we can begin to set out some concrete requirements.

Furthermore, if I have time, I'd like to include:

I'm pretty sure I won't get to these and they don't quite seem necessary, given our goals but you never know. There are some things I'm not going to focus on initially, but a more professional engine would certainly include:

It all sounds like a lot, doesn't it? But there's a lot of support out there, with many libraries available for free. There are plenty of books, tutorials and existing engines one can learn from. Not only that, I've done things like animation with bones, cameras and scene graphs before, so I can just reuse these (I hope!).

I've decided to use the Vulkan graphics API instead of OpenGL or Metal. The reason is that Vulkan is cross-platform and modern. I've no interest in Apple's walled garden anymore and as much as I love OpenGL, I think it's past its prime. It's had a good long run though, and is still in use in various contexts. Vulkan is known to be difficult, with many lines of code required to get even a simple triangle on screen. But I've managed this before and I think I can get my head around it all. I used Vulkan when I was Spotting Seals in Sonar. Turns out that Vulkan is very quick at generating sonar images from tables of numbers.

Secondly, I'm going to use Rust as the programming language. I can hear the cries already, but hear me out. I think it's fair to say that the writing is on the wall for C and C++. There may be other low-level languages with good support for Vulkan and the underlying GPU hardware, but I do think that Rust has some lovely features that I'd like to take advantage of, chief of which is the way libraries are managed. Not only that, but I quite enjoy writing Rust and I've no idea where C++ is going these days. It seems to me (and others) that it's lost its way somewhat. I don't want to have to battle with CMake or Meson anymore (though I do quite like Meson). Besides, I've already written part of the engine in Rust. I think it's good to learn a new language now and then, and since Rust is gaining traction, it might be a good idea to at least have some experience in it.

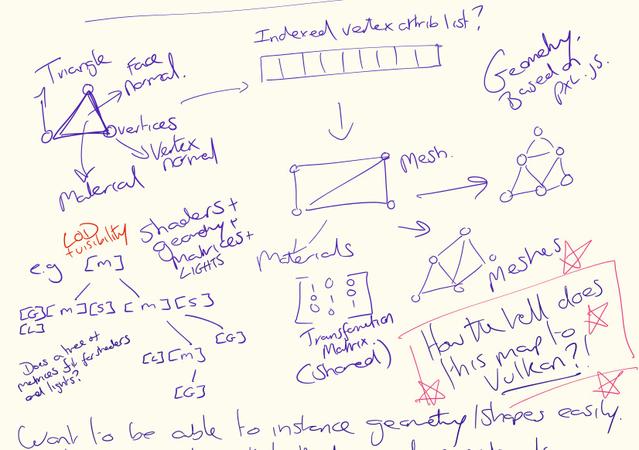

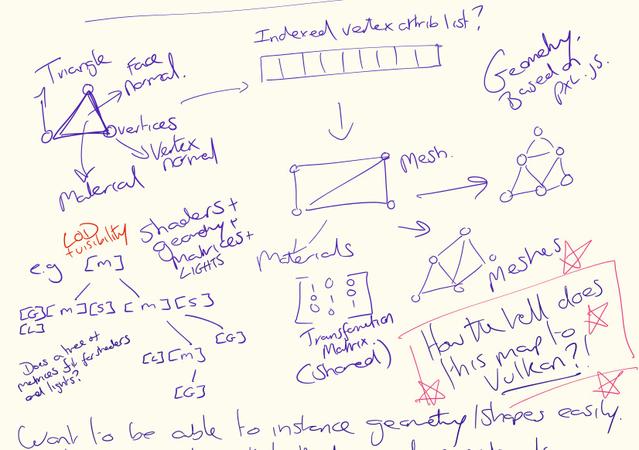

The overall design will follow a hierarchical scene graph. The idea of a scene graph is to describe your world in terms of the positions of things relative to each other. For example, you might have a room, rendered at the origin of your scene. Within that, you might have a table, which is a child of the room. Any changes to the room, such as its size or position, would affect the table. It's a fairly standard approach, but it works well from the perspective of matrix transformations and shaders. You can traverse the graph to build the transformations and create a list of the operations you need to throw at Vulkan. The scene graph can be re-ordered and changed on the fly.

Nodes in the graph will always contain a matrix - a transformation. They can also contain geometry, a shader and perhaps extra parameters for said shader. Mostly, it's a way of specifying the spatial relationships in a scene, culling geometry perhaps, if needed. Indeed, I suspect the LOD and BSP trees will come in here. Branches of the tree can be contained in a BSP if needed.
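To make the idea concrete, here is a minimal sketch of a node and a traversal that accumulates world transforms. It is not the engine's actual code: the names `Node`, `Mat4`, `translation`, `mul` and `world_transforms` are all hypothetical, the matrix is a hand-rolled row-major array rather than anything from a maths library, and geometry and shaders are left out entirely.

```rust
/// A 4x4 matrix in row-major order (an assumption for this sketch;
/// a real engine would more likely use a maths crate).
type Mat4 = [[f32; 4]; 4];

const IDENTITY: Mat4 = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
];

/// Builds a translation matrix, with the offset stored in the last column.
fn translation(x: f32, y: f32, z: f32) -> Mat4 {
    let mut m = IDENTITY;
    m[0][3] = x;
    m[1][3] = y;
    m[2][3] = z;
    m
}

/// Plain matrix product `a * b`.
fn mul(a: &Mat4, b: &Mat4) -> Mat4 {
    let mut out: Mat4 = [[0.0; 4]; 4];
    for r in 0..4 {
        for c in 0..4 {
            for k in 0..4 {
                out[r][c] += a[r][k] * b[k][c];
            }
        }
    }
    out
}

/// Hypothetical scene-graph node: every node carries a local transform
/// and owns its children.
struct Node {
    name: String,
    local: Mat4,
    children: Vec<Node>,
}

/// Depth-first traversal: each node's world transform is its parent's
/// world transform multiplied by its own local transform.
fn world_transforms(node: &Node, parent: &Mat4, out: &mut Vec<(String, Mat4)>) {
    let world = mul(parent, &node.local);
    out.push((node.name.clone(), world));
    for child in &node.children {
        world_transforms(child, &world, out);
    }
}
```

With a room translated by (1, 0, 0) and a child table translated by (0, 2, 0), calling `world_transforms(&room, &IDENTITY, &mut out)` gives the table a world translation of (1, 2, 0): move the room and the table comes with it.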

Mapping a scene graph to Vulkan is a really tricky thing. Vulkan is a complex API - it has to be. It's an attempt to unify graphics programming over a wide range of devices. I plan to have some sort of graph traversal algorithm that runs over the user's scene graph and creates a set of Vulkan scene blobs (if you will) which Vulkan can then render. For example, say that you have 3 objects in your scene, but one of the child objects needs a different shader. Well, that would require a different Vulkan graphics pipeline to be defined and is therefore a separate draw call, but that blob would inherit the same parent matrix transforms as the other blob.
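One way the "blob" idea might be sketched is as a traversal that groups drawable nodes by the pipeline they need. Everything here is an assumption for illustration: `PipelineId` and `MeshId` are made-up handles rather than real Vulkan objects, and the transform is reduced to a translation-only `Offset` to keep the example short.

```rust
use std::collections::BTreeMap;

/// Hypothetical handles; a real engine would map these to Vulkan objects.
type PipelineId = u32;
type MeshId = u32;

/// Stand-in for a full 4x4 matrix: translation only, to keep the sketch short.
type Offset = [f32; 3];

struct SceneNode {
    offset: Offset,
    /// The pipeline (shader setup) and geometry for this node, if it draws anything.
    drawable: Option<(PipelineId, MeshId)>,
    children: Vec<SceneNode>,
}

/// One entry in a batch: what to draw and where it ends up in the world.
#[derive(Debug, PartialEq)]
struct DrawItem {
    mesh: MeshId,
    world: Offset,
}

/// Walks the graph, accumulating parent transforms, and files each
/// drawable node under the pipeline it requires.
fn collect(
    node: &SceneNode,
    parent: Offset,
    batches: &mut BTreeMap<PipelineId, Vec<DrawItem>>,
) {
    let world = [
        parent[0] + node.offset[0],
        parent[1] + node.offset[1],
        parent[2] + node.offset[2],
    ];
    if let Some((pipeline, mesh)) = node.drawable {
        batches.entry(pipeline).or_default().push(DrawItem { mesh, world });
    }
    for child in &node.children {
        collect(child, world, batches);
    }
}

/// Flattens a scene graph into one batch of draw items per pipeline.
fn build_batches(root: &SceneNode) -> BTreeMap<PipelineId, Vec<DrawItem>> {
    let mut batches = BTreeMap::new();
    collect(root, [0.0; 3], &mut batches);
    batches
}
```

In the three-object example above, two objects sharing a shader would land in one batch and the odd one out in another, while all three still pick up the same parent transform. A `BTreeMap` is used only so that batches come out in a stable order; whether that is the right structure for real draw submission is an open question.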

I'm still working all this through, mind you. Descriptors, Descriptor Pools, Fences, Command Buffers... it's a baffling system but it's beginning to make sense to me.

Often, we need to render the scene with a particular shader, to a texture. This is quite common. Indeed, if we want a deferred engine it is essential. For example, having the depth of the scene available when you do your final rendering allows for things like Screen Space Ambient Occlusion and many other effects. Actually, rendering to a special depth buffer is something we'll probably always want to do, and it requires some extra support from Vulkan. There are special functions and patterns for this sort of thing that we'll need to look into.

Loading geometry will be less of a Vulkan thing I suspect, so long as the geometry is put into a reasonable format. I'll need to decide on a small number of vertex formats. For example, a vertex has, at minimum, a 2D position. But it's likely we'll be using a 3D vertex much more often. There will often be a colour or a texture co-ordinate (maybe both). If we want to do something like flat face rendering, we might need to support face colours, instead of vertex colours (it can be a nice effect if you want to go a bit retro). Geometry consists of vertices but also vertex indices and a way to join these vertices up, such as a triangle fan or triangle strip. At first, I'm only going to support plain triangles, not fans, strips or similar. Keep it simple.
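As a rough illustration, one such vertex format and an indexed triangle mesh might look like the sketch below. The field choices, and the names `Vertex`, `Mesh` and `unit_quad`, are assumptions rather than decisions I've made for the engine.

```rust
/// One possible vertex layout: 3D position, RGBA colour and a texture co-ordinate.
/// `#[repr(C)]` keeps the field order fixed, which matters when the bytes
/// are copied into a GPU vertex buffer.
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq)]
struct Vertex {
    position: [f32; 3],
    colour: [f32; 4],
    uv: [f32; 2],
}

/// Indexed geometry made of plain triangles: every three indices form one triangle.
struct Mesh {
    vertices: Vec<Vertex>,
    indices: Vec<u32>,
}

impl Mesh {
    /// Number of whole triangles described by the index list.
    fn triangle_count(&self) -> usize {
        self.indices.len() / 3
    }

    /// Checks that indices come in groups of three and all point at real vertices.
    fn is_valid(&self) -> bool {
        self.indices.len() % 3 == 0
            && self.indices.iter().all(|&i| (i as usize) < self.vertices.len())
    }
}

/// A white unit quad in the XY plane, built from two triangles sharing two vertices.
fn unit_quad() -> Mesh {
    let white = [1.0, 1.0, 1.0, 1.0];
    let corner = |x: f32, y: f32| Vertex {
        position: [x, y, 0.0],
        colour: white,
        uv: [x, y],
    };
    Mesh {
        vertices: vec![
            corner(0.0, 0.0),
            corner(1.0, 0.0),
            corner(1.0, 1.0),
            corner(0.0, 1.0),
        ],
        indices: vec![0, 1, 2, 2, 3, 0],
    }
}
```

The quad needs only four vertices for its two triangles, which is the main argument for indices. Flat face colours would work against that sharing, since each face would need its own copies of the vertices it touches.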

We live in a time where we almost have too many resources to choose from (although mentors are probably in short supply, but that's another story). There are so many books, websites and tools out there. But I've settled on a couple to help me out:

There are a number of Rust libraries out there that seem quite handy. I've started using the following:

I use Visual Studio Code for most things these days. I'll probably continue to do so. I know there are some good Rust IDEs out there and I might give them a go, as integrated debuggers are really handy! In terms of GPU and debugging tools, I'm really not quite sure what is best, but I'm sure I'll find out.

I think it's good to have a deadline and a goal. It helps get things done. In the past, the end has always been nebulous and vague, with no timeline set. I intend to set out differently this time.

In my mind's eye, I can see the kind of game I'd like to make. Remember those old-school dungeon crawl affairs? You'd move one square at a time, turn 90 degrees, that sort of thing? At the end of 24 days I should at least have the tools to make such a thing, minus sound and cross-platform support. I'll need to render 2D as well as 3D, models and text, and have the ability to click on things. It sounds like a lot but the path is mostly clear. I've already made a start on some of it. I've some experience, admittedly from the far past, but it's not nothing.

Now the actual deadline is the end of September, so October the 1st let's say. I may get a couple of extra days here and there perhaps, but this is the hard deadline. It might be possible to extend things after that, but let's assume that's not going to happen. If I can put together a simple game from the tools I've made, I'll consider it done.

Once a week, I'll have a day to work on this. That's 7 hours roughly, maybe more on good days, maybe less. I might add a day some weeks, or a few hours. I intend to keep notes each day, just as I do at work. In fact, I'll be treating this project like a professional project as much as I can.

The first stage will be to look over my existing code base. I've put together a plan of how the Vulkan parts fit together - I can render a flat shaded model from an obj file to a screen so far. So let's start with that and get a rough breakdown:

At this point we should be able to load a model, with textures, to the screen and add things to the graph. This will be a good spot as changing basic things on the fly is a tricky thing to do in Vulkan I've found. Getting around that will be a good step.

At this point, we can start looking at the render target ladder and the 2D elements:

This stage might be the hardest - shadows and lighting.

The last stage will involve adding some interactive features:

That's as far as I've got at the moment. I'll write up my progress every couple of weeks I hope. That feels like the right sort of frequency, but we'll see how it goes.

I've decided the name of the engine will be Earendel, an Old English word that translates as a shining ray of light, particularly in the morning. It's also an Anglo-Saxon mythological figure and a star, according to Wikipedia. A character from the Lord of the Rings world is named Earendil. It's a word that has a connection to me personally. I think a good name is important - when a thing has a good name it takes on a life of its own and I'm more likely to complete it as a result. It has some strong imagery associated with it, which helps.

Let's come back to where we started. Why do this at all? Well, I think we all get the urge to create something at various points in our lives. Sometimes we act on it, other times we don't. I keep coming back to computer graphics but I've never gotten serious about a graphics engine, save for perhaps my WebGL one, and that never really had much of a plan behind it. This is a challenge to myself - can I actually do it? But it's also a way to keep current, learn about new programming languages, techniques and practices. Sure, if what you want to do is make a game, then Godot, Unity or Unreal Engine are the ways to go, but I want to train my brain so I can deliver good quality code and useful tools. Maybe I'm behind the curve a little bit, what with A.I. on the horizon, but I think keeping your engineering mind sharp is no bad thing.

Yes, it's perhaps a bit selfish - the only person who needs this is me - but then I'd be very surprised if no-one else felt this way sometimes. Sometimes, you just have to make a thing. Besides, it's only for a small number of days. I'm excited! I'd best get started!